This Spotlight highlights a few of the many ways artificial intelligence can be applied to STEM classrooms and education research, from AI-based assessment to intelligent virtual companions.

- Featured Projects:

- AI-based Assessment in STEM Education Conference (PI: Xiaoming Zhai)

- FLECKS: Fostering Collaborative Computer Science Learning with Intelligent Virtual Companions for Upper Elementary Students (PIs: Kristy Boyer and Eric Wiebe)

- Supporting Instructional Decision Making: The Potential of Automatically Scored Three-Dimensional Assessment System (PIs: Xiaoming Zhai, Joseph Krajcik, Yue Yin, Christopher Harris)

- Supporting Teachers in Responsive Instruction for Developing Expertise in Science (PIs: Marcia Linn and Brian Riordan)

- Using Natural Language Processing to Inform Science Instruction (PIs: Marcia Linn and Brian Riordan)

- Related Resources

Featured Projects

AI-based Assessment in STEM Education Conference: Potential, Challenges, and Future

PI: Xiaoming Zhai

Grade Levels: K-College

STEM Discipline: Science, Technology, Engineering, and Mathematics

This NSF-funded conference brought experts in assessment, artificial intelligence (AI), and science education to the University of Georgia for two days to generate knowledge about integrating AI into science assessment. The Framework for K-12 Science Education sets forth an ambitious vision for science learning that integrates disciplinary core ideas, science and engineering practices, and crosscutting concepts so that students can develop the competence to meet the STEM challenges of the 21st century. Achieving this vision requires shifting assessment practices away from multiple-choice items and toward performance-based, knowledge-in-use tasks in which students apply what they know. Such tasks ask students to use knowledge to solve problems and explain phenomena, and they can track students' learning progressions so that teachers can adjust instruction to meet students' needs. However, these performance-based, constructed-response items are slow to score, which often rules out timely feedback and has discouraged science teachers from using them. AI has shown potential to meet this assessment challenge. The conference therefore had three goals: (a) presenting cutting-edge research on AI-based assessments in STEM; (b) identifying the challenges of applying AI in science assessments; and (c) mapping future directions for AI-based assessment research.

Forty-one researchers from the US, Europe, and Asia participated in the conference, six of whom accepted invitations to give keynote talks. The speakers' remarks addressed the conference's five themes: (a) AI and domain-specific learning theory; (b) AI and validity theory and assessment design principles; (c) AI and technology integration theory; (d) AI and pedagogical theory focusing on assessment practices; and (e) AI and big data. Ten of the 41 participants were doctoral and postdoctoral researchers who received mentorship and guidance on future research from distinguished researchers. Participants also shared and discussed theoretical perspectives, empirical findings, and research experiences. The conference helped identify challenges and future research directions for broadening the use of AI-based assessments in science education. Finally, it supported participants in building a network in this emerging area of science assessment.

Related Findings: The project identified research gaps and challenges in five areas: (a) AI and domain-specific learning theory; (b) AI and validity theory and assessment design principles; (c) AI and technology integration theory; (d) AI and pedagogical theory focusing on assessment practices; and (e) AI and big data. In addition to the networks and research partnerships established, these findings will be documented in a forthcoming edited book titled "Uses of Artificial Intelligence in STEM Education." After an initial review of the book and its 20+ submitted chapters (currently in their second revision), Oxford University Press offered a contract to publish the book, which is scheduled for publication in summer 2023. The publisher deemed the book of "high academic interest," and the initial reviewers provided excellent commentary and suggestions to the conference members and chapter authors.

Products:

Websites

- http://ai4stem.uga.edu/project.html

- http://ai4stem.uga.edu/conference.html

- http://ai4stem.org/aiconference/

- https://coe.uga.edu/news/2022-06-international-conference-of-ai-based-assessment-in-stem-held-at-uga

Publication

- Zhai, X. & Krajcik, J. (2023). Uses of Artificial Intelligence in STEM Education. Oxford University Press. (To be published Summer of 2023.)

FLECKS: Fostering Collaborative Computer Science Learning with Intelligent Virtual Companions for Upper Elementary Students (Collaborative Research)

PIs: Kristy Boyer and Eric Wiebe

Grade Levels: 4-5

STEM Discipline: Computer Science

The FLECKS project supports the development of transformative learning experiences for children through collaborative learning in computer science. The FLECKS system leverages intelligent virtual learning companions that model crucial dimensions of healthy collaboration through dialogue to scaffold students in collaborative coding activities.

This project is based on a growing awareness that children not only can learn computer science but should be afforded opportunities to do so. Because computer science is an inherently collaborative discipline, and the new K-12 Computer Science Framework emphasizes the importance of collaboration, it is equally important that children learn effective collaboration practices. The project addresses the research question, "How can we support upper elementary-school students in computer science learning and collaboration using intelligent virtual learning companions?" To address this question, we engaged in cycles of iterative refinement that included data collection, design and development, and evidence analysis, centered on the following three research and development thrusts:

- Collect datasets of collaborative learning for computer science in diverse upper elementary school classrooms to ground-truth students’ collaborative approaches to computer science learning. Our work is situated in schools serving diverse populations in both Florida and North Carolina.

- Design, develop, and iteratively refine an intelligent learning environment with virtual learning companions that support student dyads as they learn computer science and collaborative practices. In the FLECKS system, children engage in a series of debugging activities: they are given a block-based program along with a description of how it should function, and then they work in pairs to find the problem and fix it. The virtual learning companions adapt to the students' patterns of collaboration and problem solving to provide tailored support and model crucial dimensions of healthy collaborative dialogue.

- Generate research findings about how children collaborate in computer science learning and how best to support their collaboration with intelligent virtual learning companions. These research efforts have continued to develop our understanding of best practices for CS education with elementary school students, as well as of dyadic interactions during collaborative CS learning. These studies and analyses have also informed the iterative design and development of the FLECKS intelligent learning environment, whose capabilities improved substantially this year.

The project team has studied collaborative practices by analyzing dialogue for the target collaboration strategies. Using speech, dialogue transcripts, code artifact analysis, and multimodal analysis of gesture and facial expression, the team has conducted sequential analyses to identify the virtual learning companion interactions that are particularly beneficial for students, so that development efforts can focus on expanding and refining those interactions. The analysis also identifies affordances that students did not engage with, which helps the team decide whether to eliminate or revise them. These collaborative process analytics are triangulated with qualitative classroom data from field notes, focus groups, and semi-structured interviews with students and teachers, and the themes that emerge guide subsequent refinement of the environment and learning activities.
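
The sequential analyses are not specified in detail here. As a rough illustration only, one common form of sequential analysis estimates lag-1 transition probabilities between coded dialogue moves, that is, how often one kind of utterance is followed by another. The sketch assumes a dyad's utterances have already been coded; the code labels (`exploratory`, `directive`, `self_explanation`) and the session data are hypothetical, and this is not the FLECKS team's analysis code.

```python
from collections import Counter, defaultdict


def transition_probabilities(codes):
    """Estimate lag-1 transition probabilities P(next code | current code)."""
    # Count each adjacent (current, next) pair in the coded sequence
    pair_counts = Counter(zip(codes, codes[1:]))
    # Total outgoing transitions per current code, used to normalize each row
    totals = defaultdict(int)
    for (current, _), n in pair_counts.items():
        totals[current] += n
    return {pair: n / totals[pair[0]] for pair, n in pair_counts.items()}


# Hypothetical coded utterances from one dyad's pair-programming session
session = [
    "exploratory", "exploratory", "directive", "self_explanation",
    "directive", "self_explanation", "exploratory", "exploratory",
]

probs = transition_probabilities(session)
for (current, nxt), p in sorted(probs.items()):
    print(f"{current:>16} -> {nxt:<16} {p:.2f}")
```

In practice, researchers often compare such transition tables across conditions (for example, with and without a virtual companion) rather than reading a single session in isolation.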

Related Findings:

- This project provides important insights into the collaborative processes of young children in CS problem solving. For example, we found three types of dialogue related to suggestions (i.e., Proposal, Command, and Next Step) and four types of responses to those suggestions (Tsan et al., 2018, Tsan et al., 2018). We compared children's collaborative talk across different collaborative configurations (e.g., one- and two-computer configurations) and identified qualitative evidence of how the differing affordances of the two configurations shape elementary students' collaborative practices (Zakaria et al., 2019). We identified four types of dialogue that occur when conflicts emerge during children's collaboration (i.e., Task Conflict, Control Conflict, Partner Roles/Contribution Conflict, and Other) (Tsan et al., 2021). We also found that dialogue between children during pair programming is cyclical: they spend most of their time in an exploratory talk state, but when they become confused, they enter a back-and-forth discourse of directives and self-explanations. These findings hold great promise for helping educators promote successful collaborative dialogue among their students by identifying best practices, informing instructional practice, and designing and developing adaptive tools to support such collaboration.

- This project provides valuable experience in the participatory design and iterative refinement of virtual learning companions for children. A significant challenge in supporting children's ideation alongside adult designers is the power differential children perceive between themselves and adults, which can leave children without a sense of ownership in the design process. In our initial design phase, we aimed to understand elementary learners' perceptions of "good collaboration" and depict their understanding through an iterative drawing methodology. We identified aspects of each drawing and noted commonalities across drawings (Wiggins et al., 2019). Next, we conducted a study investigating how children would build agents based on the drawings from the previous study. Finally, we examined different graphical representations of the agents with children and created higher-fidelity agent prototypes. These findings provide actionable design guidelines for co-designing virtual agents' personas and dialogues with children.

- This project reveals the great potential for virtual learning companions to model collaborative talk for children. By investigating elementary children’s collaborative behavior after interacting with virtual agents, we found an association between elementary learner dyads’ positive changes, affective reactions, and their attentiveness in collaboration after agent interventions (Wiggins et al., 2022). We also found that the learning companions fostered more higher-order questions and promoted significantly higher computer science attitude scores than a control condition. These findings suggest ways in which intelligent virtual agents may be used to promote effective collaborative learning practices for children, and provide directions for much-needed future investigation on virtual learning companions’ interactions with young learners.

Product(s):

Website: www.flecksproject.org

Video: https://drive.google.com/file/d/1NYHMDU_LqJbQbSg8WktI4DBdmu8oNxxh/view

Publications:

- Wiggins, J. B., Earle-Randell, T. V., Bounajim, D., Ma, Y., Ruiz, J. M., Liu, R., ... & Boyer, K. E. (2022, September). Building the dream team: children's reactions to virtual agents that model collaborative talk. In Proceedings of the 22nd ACM International Conference on Intelligent Virtual Agents (pp. 1-8).

- Vandenberg, J., Lynch, C., Boyer, K. E., & Wiebe, E. (2022). “I remember how to do it”: exploring upper elementary students’ collaborative regulation while pair programming using epistemic network analysis. Computer Science Education, 1-29.

- Ma, Y., Martinez Ruiz, J., Brown, T. D., Diaz, K. A., Gaweda, A. M., Celepkolu, M., Boyer, K. E., Lynch, C. F., & Wiebe, E. (2022, February). It's Challenging but Doable: Lessons Learned from a Remote Collaborative Coding Camp for Elementary Students. In Proceedings of the 53rd ACM Technical Symposium on Computer Science Education V. 1 (pp. 342-348).

Supporting Instructional Decision Making: The Potential of Automatically Scored Three-Dimensional Assessment System (Collaborative Research)

PIs: Xiaoming Zhai, Joseph Krajcik, Yue Yin, and Christopher Harris

Grade Levels: 6-8

STEM Discipline: Physical Science

The PASTA project aims to tackle the challenges of engaging students in science assessment practices that integrate three dimensions (3D) of scientific knowledge: science and engineering practices, disciplinary core ideas, and crosscutting concepts. Led by scholars from the University of Georgia, Michigan State University, the University of Illinois at Chicago, and WestEd, the project team collaboratively develops valid and robust AI and machine learning algorithms to automatically assess three-dimensional student performance. To use the assessment information in a meaningful way, the team also focuses on developing instructional strategies to support teachers' assessment practices. By using the assessments, our system, and the instructional strategies, we expect that teachers will develop the pedagogical content knowledge to implement 3D science assessment tasks.

This project builds upon our prior projects in which we developed three-dimensional assessments aligned with the Next Generation Science Standards (led by Christopher Harris, Joseph Krajcik, and James Pellegrino), as well as artificial intelligence (AI) technologies that can automatically score students' constructed responses (led by Xiaoming Zhai). The project has three goals: (a) develop automatically generated student reports (AutoRs) for 3D science assessments to assist middle-school teachers in noticing, attending to, and interpreting information during ongoing classroom teaching, (b) develop effective instructional strategies that help teachers use AutoRs to make instructional decisions that improve students' learning, thereby strengthening teachers' pedagogical content knowledge, and (c) examine the effectiveness of AutoRs and the instructional strategies in supporting teachers' decision making and students' 3D learning.

The collaborative project unfolds over three phases. In the first phase, we design AutoRs and explore how to present information from knowledge-in-use assessment tasks to support teachers' immediate instructional decision-making. The team used ten NGSS-aligned assessment tasks designed by the NGSA project to assess middle school students' performance on performance expectations related to chemical reactions. The research team developed 10 diagnostic rubrics for the tasks and employed four types of algorithms to build scoring models for them. Machine-human agreement for the scoring models exceeded the benchmarks.
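
The project reports only that machine-human agreement exceeded benchmarks, without naming the statistic. Cohen's kappa is one widely used measure of agreement between a human rater and a scoring model because it corrects raw agreement for agreement expected by chance. The sketch below computes unweighted kappa on hypothetical rubric scores (0-2); the scores and the `cohens_kappa` function are illustrative assumptions, not the project's data or code.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters' categorical scores."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("score lists must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of responses given the same score
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal score distribution
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    p_chance = sum(counts_a[c] * counts_b[c] for c in categories) / n ** 2
    if p_chance == 1:
        return 1.0  # both raters used one identical category throughout
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical rubric scores (0-2) for ten student responses
human = [0, 1, 2, 2, 1, 0, 2, 1, 1, 0]
machine = [0, 1, 2, 1, 1, 0, 2, 1, 2, 0]

kappa = cohens_kappa(human, machine)
print(f"kappa = {kappa:.2f}")  # prints "kappa = 0.70"
```

Here the raters agree on 8 of 10 responses (0.80), chance agreement is 0.34, so kappa is (0.80 - 0.34) / (1 - 0.34), roughly 0.70. For ordinal rubric levels, a weighted kappa that penalizes large disagreements more than adjacent ones is a common alternative.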

The project team developed a human-centered design framework to guide the development of AutoRs and designed several AutoR templates. To make the reports accessible and usable, the AutoRs present essential information in various ways. The team has worked with middle school teachers in cognitive labs to explore teachers' interpretations and perceptions of three types of AutoRs. Finally, the team developed a mobile application, the AI-Scorer, for iOS, Android, and web browsers. The AI-Scorer automatically scores students' written responses and presents AutoRs to teachers.

In the second phase, the research team will design instructional strategies and study what features of instructional strategies can support teachers' use of AutoRs to make effective instructional decisions. To support teachers' use of AutoRs, the team will develop instructional strategy supports to use in classroom settings. We aim to improve teachers' pedagogical content knowledge of classroom assessment practices using the AutoRs.

Phase three will pilot test the AutoRs and the instructional strategies to examine how they support students' science learning and teachers' instructional decision-making.

Related Findings:

1. Teachers' interpretation and use of AutoRs for instructional decisions.

Our preliminary analysis of the cognitive interviews suggests that teachers tried to connect the information from different sources to help them deepen their understanding of students' learning. Their interpretations did not depend on the purpose of using the assessment tasks; instead, they depended on the classes they taught. The results also suggest that teachers' instructional decisions depend on how the AutoRs are contextualized instead of the scores provided.

2. Preliminary results on instructional strategies

We created a five-step design model for developing instructional strategies: (1) clarifying learning goals, (2) characterizing student performances on 3D assessment tasks, (3) identifying students' academic, social, and cultural backgrounds, (4) specifying culturally appropriate instructional strategies for teachers to use in their classes, and (5) developing supports for teachers adapting their instructional practices to meet student learning needs. Following the design model, we developed four types of instructional strategies: firsthand experience, second-hand experience, online simulation, and multimodal representations. We collected and analyzed interview data on teachers' feedback about the designed strategies. Thematic analysis revealed four emerging themes: goal orientation, feasibility, cultural relevance, and inclusion and fairness. Our preliminary results suggest that expert teachers perceived that the instructional strategies could support student performance on the 3D assessment task. Their feedback on these themes provides valuable input for revising the initial instructional strategies.

Interviews with six experienced teachers show that their choice of inclusive strategies differed based on their school and student backgrounds. Teachers with ELL students in their classrooms focused on language use, whereas teachers from low-SES schools considered the accessibility of instructional materials and technologies as a way of providing equal opportunities. The teachers also viewed small-group activities as essential for inclusion.

We also used the thematic analysis to refine the instructional strategies. Five themes emerged:

- Contextualization was viewed as important and tied to relevant phenomena and familiar objects.

- Hands-on experiences through manipulating, collecting, and observing data were deemed essential for students.

- Starting with simple and building to complex ideas using similar activities over an instructional period was thought to help students gain proficiency.

- Attending to language, learning modalities, and accessibility was perceived to foster fairness and equity.

- Emphasizing inclusive strategies differed among teachers based on their school and student backgrounds.

Product(s):

Website: https://ai4stem.org/pasta/

Selected Publications:

- Zhai, X., He, P., & Krajcik, J. (2022). Applying machine learning to automatically assess scientific models. Journal of Research in Science Teaching, 59(10), 1765-1794. http://dx.doi.org/10.1002/tea.21773

- He, P., Shin, N., Zhai, X., & Krajcik, J. (2023). Guiding Teacher Use of Artificial Intelligence-Based Knowledge-in-Use Assessment to Improve Instructional Decisions: A Conceptual Framework. In X. Zhai & J. Krajcik (Eds.), Uses of Artificial Intelligence in STEM Education. Under review.

- He, P., Zhai, X., Shin, N., & Krajcik, J. (2023). Using Rasch Measurement to Assess Knowledge-in-Use in Science Education. In X. Liu & W. Boone (Eds.), Advances in Applications of Rasch Measurement in Science Education. Springer Nature. Under review.

Selected Presentations:

- Zhai, X. (2022). AI Applications in Innovative Science Assessment Practices. Invited Talk. Faculty of Education, Beijing Normal University.

- Panjwani, S. & Zhai, X. (2022). AI for students with learning disabilities: A systematic review. International Conference of AI-based Assessment in STEM. Athens, Georgia.

- Latif, E., & Zhai, X. (2023). AI-Scorer: Principles for Designing Artificial Intelligence-Augmented Instructional Systems. Annual Meeting of the American Educational Research Association. Chicago. Under review.

- Latif, E., Zhai, X., Amerman, H., & He, X. (2022). AI-Scorer: An AI-augmented Teacher Feedback Platform. International Conference of AI-based Assessment in STEM. Athens, Georgia.

- Zhai, X. (2022). AI-based Assessment. STEAM-Integrated Robotics Education Workshop. The University of Georgia & Daegu National University.

- Weiser, G. (2022). Actionable Instructional Decision Supports for Teachers Using AI-generated Score Reports. 2022 annual conference of the National Association of Research in Science Teaching. Vancouver, BC, Canada.

Supporting Teachers in Responsive Instruction for Developing Expertise in Science (Collaborative Research)

PIs: Marcia Linn and Brian Riordan

Grade Levels: 5-12

STEM Discipline: Earth, Life and Physical Science, Data Science, Computational Thinking

When instruction responds to the diverse needs of each student, it increases engagement in science and improves learning outcomes. To help teachers take advantage of their students' evolving ideas and understandings, STRIDES uses advanced technologies such as natural language processing to analyze logged student work as students study Web-based Inquiry Science Environment (WISE) units. Summaries of students' written explanations help teachers respond to their students' ideas in real time. To help teachers use the summaries, the unit suggests research-proven instructional strategies. The researchers study how to design the summaries, how teachers use them to customize their instruction, and how students benefit.

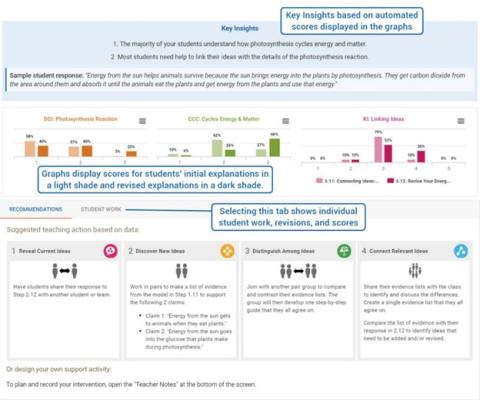

Adapting curriculum to respond to students' developing ideas has long been viewed as a hallmark of effective science instruction. Providing teachers with information about their students that they can use to customize the curriculum is more problematic. Teachers report that assessment measures do not align with their own metrics for determining student success, and other studies have found that teachers need guidance to interpret these data. Teachers are challenged to connect student data to actionable changes in instruction. STRIDES develops a new WISE interface, the Teacher Action Planner (TAP), to support teachers in diagnosing student understanding and customizing the curriculum in response to students' developing understanding. The TAP builds on technologies tested in prior work: WISE units that address the NGSS and support automated scoring of student constructed responses; a teacher dashboard that illustrates student progress and supports teachers in grading and assigning guidance to individual workgroups; authoring tools that are usable by novices and support efficient customization of units with features such as rich-text editing, pedagogically driven templates for designing unit steps, and importing steps from other WISE units; and a WISE environment that logs teacher and student actions and revisions. The TAP illuminates patterns in the automatically scored student data and connects those patterns to research-based customization choices, enabling teachers to adapt the curriculum in response to their students' ideas (Figure 1: Teacher Action Planner). The TAP also provides teachers with a synthesis of logged data illustrating the impact of their customization activity.

STRIDES has developed six curriculum units with TAPs for embedded short-essay assessments. The TAP in each unit shows teachers, in real time, the progress their students are making in integrating crosscutting concepts to form a coherent explanation of disciplinary core ideas. We explore how the TAP supports teachers in customizing the unit in response to their students' evolving learning needs, and the impact of customization on students' science learning outcomes. We also study how cyclical engagement in customization and reflection, mediated by the TAP, strengthens teachers' pedagogical content knowledge and teaching practice.

Related Findings: (Excerpts from: Linn, M.C., Gerard, L.F., & Donnelly-Hermosillo, D. (in press). Synergies between learning technologies and learning sciences: Promoting Equitable Secondary Science Education. Handbook of Research on Science Education Volume III, Routledge Press.)

Example Dashboard.

The Teacher Action Planner (TAP) summarizes class-level learning analytics to support teachers in assessing and guiding student knowledge integration in pre-college science. As shown in the Figure, the TAP is generated from the automated, NLP-based assessment of student written explanations. In this example, the explanations respond to a prompt embedded in a 10-day web-based inquiry science unit on Photosynthesis and Cellular Respiration. In the unit, students experiment with interactive visualizations to discover how matter and energy flow and transform inside a plant. Students encounter the question "How does the sun help animals to survive?" midway through the unit, which helps them use evidence from the visualizations to form an explanation. For this embedded assessment, students received one round of automated, adaptive guidance and had the opportunity to revise. The adaptive computer guidance included a personalized hint targeting a missing or incorrect idea, with a link to relevant evidence. The students' initial and revised explanations are automatically assessed using NLP, based on the NGSS dimensions (DCI, CCC) associated with the target Performance Expectation (PE) and the linking of those ideas (knowledge integration scoring).

The histograms illustrate the class's average initial score on their explanations and their revised score after using the automated guidance to revise. This TAP highlights student needs both in developing the three-dimensional understanding called for by NGSS and in the scientific practice of revision. Capturing and displaying student revision in the TAP was a design iteration developed in response to teacher interviews. Teachers wanted to take advantage of both the computer-based, individualized guidance and the TAP rather than use the TAP alone. They wanted to use the TAP to target their assistance to students who still needed help after receiving one round of computer guidance. They also wanted to see how their students modified their explanations with guidance, rather than seeing only their final responses. The recommendations, another feature of the TAP dashboard, are adaptive suggestions for instructional actions that correspond to the pattern in the class scores.
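
To make the initial-versus-revised display concrete, the following sketch summarizes a class's scores along the lines the TAP is described as showing: counts per score level before and after one round of automated guidance, class means, and a list of students who may still need teacher help. The 1-5 score scale, the cutoff of 3, the student IDs, and the `summarize` helper are all hypothetical; this is not the TAP's implementation.

```python
from collections import Counter
from statistics import mean

# Hypothetical KI scores (1-5 scale assumed) for one class:
# student ID -> (initial score, revised score after automated guidance)
scores = {
    "s01": (2, 3), "s02": (3, 4), "s03": (1, 2), "s04": (4, 4),
    "s05": (2, 2), "s06": (3, 5), "s07": (2, 4), "s08": (1, 1),
}


def summarize(scores, needs_help_below=3):
    """Build histogram counts, means, and a follow-up list (hypothetical helper)."""
    initial = [i for i, _ in scores.values()]
    revised = [r for _, r in scores.values()]
    return {
        "initial_hist": dict(sorted(Counter(initial).items())),
        "revised_hist": dict(sorted(Counter(revised).items())),
        "initial_mean": mean(initial),
        "revised_mean": mean(revised),
        # Students still below the cutoff after one round of automated guidance
        "still_need_help": sorted(
            s for s, (_, r) in scores.items() if r < needs_help_below
        ),
    }


summary = summarize(scores)
for label in ("initial", "revised"):
    print(f"{label} (mean {summary[label + '_mean']:.2f})")
    for score, count in summary[label + "_hist"].items():
        print(f"  {score}: {'#' * count}")
print("Still need help:", ", ".join(summary["still_need_help"]))
```

Keeping both distributions side by side reflects the teacher request described above: seeing how scores shift with computer guidance, then targeting the students who remain below the cutoff.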

A recent investigation examined the interventions that ten middle school science teachers implemented when using the TAP in their classrooms. After teaching a lesson, the teachers reflected on the TAP analytics and recommended actions, then used the information to redesign their lesson for the next day. Each teacher provided a targeted small-group or whole-group intervention. While most of the teachers' interventions helped students discover new ideas to fill identified gaps, few also helped students distinguish between the initial ideas in their explanations and the new evidence presented, or link new ideas (Gerard et al., 2020). For example, most interventions focused on reviewing evidence from a computer model in the unit through a mini-lecture. Guiding students to distinguish between their own ideas and those presented by instruction is crucial to equitable instruction because it positions the student as an arbiter of knowledge and encourages them to use evidence to sort out and link ideas.

Publications:

- Linn, M.C., Gerard, L.F., & Donnelly-Hermosillo, D. (in press). Synergies between learning technologies and learning sciences: Promoting Equitable Secondary Science Education. Handbook of Research on Science Education III. Routledge Press. https://drive.google.com/file/d/1Zfx2CMSn37XusCzHsjgLCKfAQMob_iou/view?usp=sharing

- Wiley, K., Gerard, L., Bradford, A., & Linn, M.C. (in press). Empowering teachers and promoting equity in science. Handbook of Educational Psychology. Oxford Press. https://drive.google.com/file/d/187eZIBiNmatycNAVTUKd9YLdV3lSx3xK/view?usp=sharing

- Gerard, L., Bradford, A., & Linn, M. C. (2022). Supporting Teachers to Customize Curriculum for Self-Directed Learning. Journal of Science Education and Technology. https://doi.org/10.1007/s10956-022-09985-w

- Gerard, L., Wiley, K., Debarger, A. H., Bichler, S., Bradford, A., & Linn, M. C. (2022). Self-directed Science Learning During COVID-19 and Beyond. Journal of Science Education and Technology, 31(2), 258–271. https://doi.org/10.1007/s10956-021-09953-w

- Bichler, S., Gerard, L., Bradford, A., & Linn, M. C. (2021). Designing a Remote Professional Development Course to Support Teacher Customization in Science. Computers in Human Behavior. https://doi.org/10.1016/j.chb.2021.106814

Presentations:

- Bradford, A., Bichler, S., & Linn, M.C. (2021). Designing a Workshop to Support Teacher Customization of Curricula. Proceedings of 15th International Conference of the Learning Sciences – ICLS 2021. International Society of the Learning Sciences, 2021 https://repository.isls.org/bitstream/1/7506/1/482-489.pdf

- Gerard, L., Bichler, S., Billings, K., & Linn, M.C. (2022). Science Teachers' Use of a Learning Analytics Dashboard To Design Responsive Instruction. Paper accepted for presentation at the American Educational Research Association annual meeting.

- Billings, K., Gerard, L., & Linn, M.C. (2021). Improving teacher noticing of students’ science ideas. In E. de Vries, J. Ahn, & Y. Hod (Eds.), Proceedings of 15th International Conference of the Learning Sciences – ICLS 2021 (pp. 100–115). International Society of the Learning Sciences, 2021 https://repository.isls.org//handle/1/7379

Using Natural Language Processing to Inform Science Instruction (Collaborative Research)

PIs: Marcia Linn and Brian Riordan

Grade Levels: 5-12

STEM Discipline: Earth, Life and Physical Science, Data Science, Computational Thinking

Middle school science teachers want technology to amplify their impact on the hundreds of students in their classes who hold multiple, diverse ideas. By exploiting advances in natural language processing (NLP), this project detects the rich ideas that students develop during everyday experience. The project takes advantage of NLP methods for detecting student ideas to design adaptive guidance that gets each student started on reconsidering their own ideas and pursuing deeper understanding. This work continues a successful partnership among the University of California, Berkeley, the Educational Testing Service (ETS), and science teachers and paraprofessionals from six middle schools enrolling students from diverse racial, ethnic, and linguistic groups whose cultural experiences may be neglected in science instruction. The partnership conducts a comprehensive research program to develop NLP technology and adaptive guidance that detect student ideas and empower students to use their ideas as a starting point for deepening science understanding. Prior work in automated scoring has primarily identified incorrect responses to specific questions or the overall knowledge level of a student explanation. This project instead identifies specific ideas in responses to open-ended science questions. Moreover, the idea detection technology goes beyond a student's general knowledge level to adapt to the student's cultural and linguistic entry points into the topic.

By leveraging advanced NLP technologies, NLP-TIPS detects key ideas and focuses the student on the mechanism behind each idea. For example, in a 7th-grade unit on Plate Tectonics, students were asked how Mt. Hood in Oregon was formed. One student responded, “Mountains are formed by the ice when it snows.” After using NLP to detect the “formed by snow” idea, NLP-TIPS could probe the reasoning behind the explanation with questions such as “Is the mountain shorter when the snow melts?” or “How might a mountain form in a warm place without snow?” Each of these questions prompts the student to treat their idea as a scientific conjecture open to empirical investigation. The questions also prompt the student to evaluate their knowledge and construct a method to distinguish among alternatives, a core principle of constructivist learning.

NLP-TIPS adaptive guidance is designed using the knowledge integration (KI) instructional framework (Linn & Eylon, 2011). KI describes learning as a process of using evidence to distinguish among ideas gathered in different contexts, including everyday experiences and science instruction. Students formulate and test links among ideas to refine them. NLP-TIPS uses two guidance structures, both aligned with KI, that leverage NLP-detected ideas: adaptive dialog and peer interaction. The adaptive dialog combines NLP idea detection with the KI framework to design adaptive prompts that advance students’ science learning and encourage their efforts to distinguish among ideas. The peer interaction structure uses NLP idea detection and the KI framework to group peers and encourage them to distinguish among ideas. The agent pairs students whose expressed ideas are well suited to collaborative learning because they display conflicting mechanisms, which can spark debate; an idea that is missing from the partner’s explanation, which can promote connections; or a similar mechanism expressed differently, which can encourage clarification and refinement.
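The three pairing criteria above can be sketched as a simple rule-based function. This is a minimal illustration, not the NLP-TIPS implementation: it assumes each student's detected ideas arrive as a set of idea labels, and the idea labels, the `IDEA_MECHANISM` mapping, and the `CONFLICTING` mechanism pairs are hypothetical examples loosely based on the Car on a Cold Day ideas. The greedy `pair_students` loop is likewise an assumption about how pairs might be formed.

```python
from __future__ import annotations

from typing import Optional

# Hypothetical mapping from detected idea labels to the mechanism each idea invokes.
IDEA_MECHANISM = {
    "sun_heats_car": "solar_heating",
    "dark_seats_absorb": "solar_heating",
    "no_contact_cold_air": "insulation",
    "snow_cools_car": "conduction_from_snow",
}

# Hypothetical mechanism pairs treated as conflicting explanations.
CONFLICTING = {frozenset({"solar_heating", "conduction_from_snow"})}


def pairing_reason(ideas_a: set[str], ideas_b: set[str]) -> Optional[str]:
    """Classify a candidate pair using the three criteria described above."""
    mech_a = {IDEA_MECHANISM[i] for i in ideas_a}
    mech_b = {IDEA_MECHANISM[i] for i in ideas_b}
    # Conflicting mechanisms: pair to spark debate.
    if any(frozenset({m, n}) in CONFLICTING for m in mech_a for n in mech_b):
        return "debate"
    # One student invokes a mechanism missing from the other's explanation: promote connections.
    if mech_a != mech_b:
        return "connect"
    # Same mechanisms expressed through different ideas: encourage clarification.
    if ideas_a != ideas_b:
        return "clarify"
    return None  # identical ideas: little to gain from pairing


def pair_students(detected: dict[str, set[str]]) -> list[tuple[str, str, str]]:
    """Greedily pair each student with the first remaining partner that meets a criterion.

    Students with no suitable partner are left out of the result.
    """
    unpaired = list(detected)
    pairs = []
    while unpaired:
        a = unpaired.pop(0)
        for b in unpaired:
            reason = pairing_reason(detected[a], detected[b])
            if reason is not None:
                unpaired.remove(b)  # safe: we stop iterating right after removing
                pairs.append((a, b, reason))
                break
    return pairs


# Usage with made-up detections for four students.
detected = {
    "Ana": {"sun_heats_car"},
    "Ben": {"snow_cools_car"},
    "Cy": {"dark_seats_absorb"},
    "Di": {"sun_heats_car"},
}
print(pair_students(detected))
```

A real grouping agent would likely weigh all candidate pairs (for example, preferring debate pairs across the whole class) rather than taking the first acceptable partner; the greedy loop only keeps the sketch short.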

Related Findings:

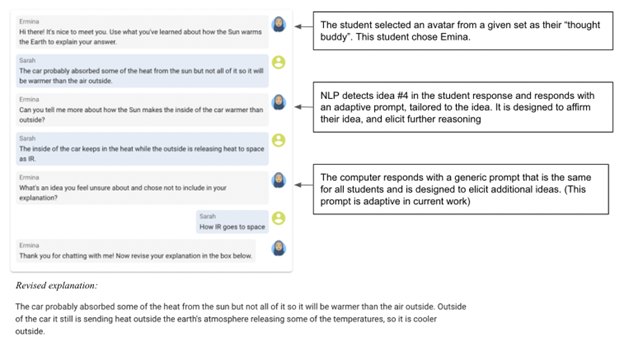

Example Adaptive Dialog

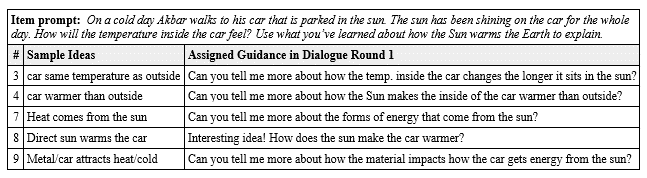

In a unit on Urban Heat Islands, an AI-based dialog helps teachers elicit student ideas. First, the student selects an avatar from a set designed to reflect the racial and gender diversity of the class. Next, the selected avatar starts a conversation by posing a KI explanation prompt that elicits the student’s ideas about the mechanisms in a heat island (see Figure 1): “How does the temperature inside a car that has been parked in the snow on a sunny day feel?” The student types and submits a response. Students often express initial ideas based on their experiences, such as “the air is warmer inside the car because it had no contact with the cold air outside,” “I think that the car will be hotter, because it was parked in the sun area,” or “The dark seats of the car make it hot.” Using state-of-the-art natural language processing techniques, the avatar detects an idea in the student’s response and replies. The reply affirms the detected idea and asks a question tailored to further elicit the reasoning underlying it. This approach is modeled on expert teacher guidance and on research on guidance. The exchange repeats for two talk turns between student and avatar. Then the student is prompted to revise their initial explanation based on the new ideas gathered during the dialog.
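The turn structure of this dialog can be sketched as a short loop. This is a hypothetical illustration, not the project's code: a keyword matcher (`detect_idea`) stands in for the NLP idea detector, and the prompt wording and idea labels are invented. The first follow-up adapts to the detected idea and the second uses a generic prompt, matching the study reported below.

```python
from __future__ import annotations

from typing import Callable, Optional

OPENING = ("How does the temperature inside a car that has been parked "
           "in the snow on a sunny day feel?")

# Hypothetical adaptive follow-ups keyed by detected idea label.
ADAPTIVE_PROMPTS = {
    "sun_heats_car": "You mentioned the sun. What happens to sunlight after it enters the car?",
    "dark_seats_absorb": "You noticed the dark seats. Why might dark seats get hotter than light ones?",
    "no_contact_cold_air": "You thought about the cold air outside. How could heat move into or out of the car?",
}
GENERIC_PROMPT = "What else might affect how warm the car feels inside?"
REVISE_PROMPT = "Now revise your first explanation using the ideas from our conversation."


def detect_idea(response: str) -> Optional[str]:
    """Keyword stand-in for the NLP idea detector (hypothetical, for illustration only)."""
    text = response.lower()
    if "seat" in text:
        return "dark_seats_absorb"
    if "sun" in text:
        return "sun_heats_car"
    if "cold air" in text:
        return "no_contact_cold_air"
    return None


def run_dialog(get_response: Callable[[str], str], rounds: int = 2) -> list[tuple[str, str]]:
    """Return (avatar prompt, student reply) pairs for one dialog."""
    transcript = []
    initial = get_response(OPENING)
    transcript.append((OPENING, initial))
    idea = detect_idea(initial)
    for round_no in range(rounds):
        # Round 1 adapts to the detected idea; later rounds, or no detected idea, use the generic prompt.
        if round_no == 0 and idea in ADAPTIVE_PROMPTS:
            prompt = ADAPTIVE_PROMPTS[idea]
        else:
            prompt = GENERIC_PROMPT
        transcript.append((prompt, get_response(prompt)))
    # Finally, ask the student to revise the initial explanation.
    transcript.append((REVISE_PROMPT, get_response(REVISE_PROMPT)))
    return transcript


# Usage with scripted replies standing in for a real student.
replies = iter([
    "I think that the car will be hotter, because it was parked in the sun area",
    "The light gets trapped inside.",
    "IDK",
    "Sunlight enters the car and gets trapped, so it is warmer inside.",
])
log = run_dialog(lambda prompt: next(replies))
for prompt, reply in log:
    print(f"Avatar: {prompt}\nStudent: {reply}")
```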


Early research suggests that the adaptive prompts in the dialog encouraged students to reflect on prior experiences, consider new variables, and raise scientific questions. In one study, 66 ninth-grade students engaged in the Car on a Cold Day dialog at the start of the unit (prior to instruction). Most of the students identify as part of historically marginalized racial, linguistic, and socioeconomic groups (97% Latinx; 95% of parents speak a language other than English at home; 85% eligible for free or reduced-price lunch).

We investigated two research questions: (1) How accurate is the NLP technology at detecting the ideas students hold about an open-ended science dilemma? (2) How do students respond to adaptive guidance that promotes integrated revision?

To train the NLP scoring model, we used a corpus of more than 1,000 student explanations collected in prior research at five schools whose demographics were similar to those of the school in this study. Two researchers developed a 5-point Knowledge Integration rubric to score the student explanations for ‘Car on a Cold Day’; the rubric rewards students for linking normative, relevant ideas. The researchers also identified the distinct ideas expressed by students (21 ideas, Table 1); a single explanation could include multiple ideas. The researchers refined the rubrics until they reached inter-rater reliability of Cohen’s kappa > .85. Each researcher then coded half of the explanations, assigning a KI score and a tag for each distinct idea within each explanation. These coded data were used to train the NLP model.
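Cohen’s kappa corrects raw agreement for the agreement expected by chance: κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e is the chance agreement implied by each rater’s label frequencies. The sketch below computes unweighted kappa for two raters. The source does not say whether a weighted variant was used for the ordinal KI scores, and the example scores are made up for illustration.

```python
from __future__ import annotations

from collections import Counter
from collections.abc import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa for two raters who each assign one label per item."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items both raters labeled identically.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1:
        # Both raters used one identical label throughout; kappa is undefined, treat as perfect.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical KI scores (1-5) from two raters on ten explanations.
scores_a = [3, 4, 2, 5, 3, 1, 4, 2, 3, 5]
scores_b = [3, 4, 2, 5, 3, 2, 4, 2, 3, 4]
kappa = cohens_kappa(scores_a, scores_b)
print(f"kappa = {kappa:.2f}")  # prints kappa = 0.74
```

Here the raters agree on 8 of 10 items (p_o = 0.80), but their label frequencies alone would produce p_e = 0.23, so kappa is noticeably lower than raw agreement.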


To build the NLP model, we used a word classification (sequence labeling) approach (Riordan et al., 2020). First, the words in each response are transformed into numerical vectors by state-of-the-art pretrained language models, which use the self-attention mechanism to capture latent relationships in the English language. Second, idea detection is cast as a multi-label classification problem for each word. Consecutive word-level predictions of a targeted idea form a predicted idea span. Hyperparameters were tuned and the model was evaluated with 10-fold cross-validation; the final model, built on all available data, was then deployed. Adaptive dialogue prompts for each idea were designed to engage students in conversation, model an integrated revision process, and promote science understanding.
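The step from word-level predictions to idea spans can be sketched as follows. This is a minimal illustration, not the model from Riordan et al. (2020): it assumes the pretrained language model and classifier have already produced a probability per idea label for each word token, and the 0.5 threshold and label names are hypothetical. Because the problem is multi-label, one token can belong to spans for several ideas.

```python
from __future__ import annotations


def predict_spans(
    tokens: list[str],
    probs: list[dict[str, float]],
    threshold: float = 0.5,
) -> list[tuple[str, int, int, str]]:
    """Turn per-token multi-label probabilities into (idea, start, end, text) spans.

    A span is a maximal run of consecutive tokens predicted positive for the same
    idea; `end` is exclusive.
    """
    if len(tokens) != len(probs):
        raise ValueError("need one probability dict per token")
    spans = []
    labels = sorted({label for p in probs for label in p})
    for label in labels:
        start = None
        for i, p in enumerate(probs):
            positive = p.get(label, 0.0) >= threshold
            if positive and start is None:
                start = i  # a new run of this idea begins
            elif not positive and start is not None:
                spans.append((label, start, i, " ".join(tokens[start:i])))
                start = None
        if start is not None:  # run continues to the last token
            spans.append((label, start, len(tokens), " ".join(tokens[start:])))
    return sorted(spans, key=lambda s: (s[1], s[0]))


# Hypothetical classifier output for one short response.
tokens = ["the", "dark", "seats", "make", "it", "hot"]
probs = [
    {"dark_seats_absorb": 0.1},
    {"dark_seats_absorb": 0.9},
    {"dark_seats_absorb": 0.8},
    {"dark_seats_absorb": 0.3, "car_gets_hot": 0.2},
    {"car_gets_hot": 0.6},
    {"car_gets_hot": 0.7},
]
print(predict_spans(tokens, probs))
```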

Findings

To evaluate the NLP model’s accuracy, we used word token-level micro-averaged precision, recall, and F1 scores, which account for the imbalance in idea classes. Although the model often predicted the length of idea spans inaccurately, it correctly located spans for many of the idea classes. Aggregating across all targeted ideas, we obtained a word-level micro-averaged precision of 0.71 and a recall of 0.51, yielding an F1 score of 0.60.
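Micro-averaged metrics pool true positives, predictions, and gold labels across all idea classes before dividing. A minimal sketch, assuming each gold or predicted label is represented as a hypothetical `(response_id, token_index, idea)` tuple:

```python
from __future__ import annotations


def micro_prf(gold: set[tuple], pred: set[tuple]) -> tuple[float, float, float]:
    """Micro-averaged precision, recall, and F1 over pooled (response, token, idea) labels."""
    tp = len(gold & pred)  # labels predicted for the right token and the right idea
    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Made-up labels: two of four gold labels recovered, with two false positives.
gold = {(0, 1, "a"), (0, 2, "a"), (0, 4, "b"), (1, 0, "a")}
pred = {(0, 1, "a"), (0, 2, "a"), (0, 3, "a"), (1, 0, "b")}
print(micro_prf(gold, pred))
```

As a check on the reported figures, the harmonic mean of the rounded values 0.71 and 0.51 is about 0.59, which matches the reported F1 of 0.60 up to rounding of precision and recall.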

Analysis of students’ revisions indicated that, overall, the dialogue helped students revise their explanations: 91% of the students (87/96) revised their initial explanation after engaging in two rounds of dialogue. In contrast, in our prior research only 69% of students revised in response to two rounds of knowledge integration adaptive guidance (Gerard & Linn, 2022). Other studies report that fewer than 50% of students engage in sustained revision when the activity calls for it (e.g., Zhu et al., 2020).

The analysis of students’ revision moves suggests that the dialogue effectively elicited student ideas. In response to the adaptive first prompt in the dialogue, 36 students (68%) added a new idea to elaborate the mechanism underlying their initial explanation. In response to the generic second prompt (the same for all students), 16 students (30%) added another new variable they considered relevant, and 6 (11%) distinguished a part of the mechanism in their initial explanation that they were uncertain about. However, 25 students (48%) struggled to recognize another relevant idea in response to the generic prompt (e.g., “I’m not sure; IDK”). This may be because the prompt was generic or because it came second in the sequence.

After the dialogue, students were encouraged to consolidate the ideas they raised in the dialogue and revise their initial response. When revising, 24 students (46%) integrated an idea they had raised in the dialogue to explain more fully the mechanism they had initially expressed. Fifteen students (28%) expressed yet another new variable, beyond those raised in the dialogue, in their revised explanation. Overall, the dialogue elicited progressively more of the ideas each student held about the problem. The adaptive prompt in the first round was more effective than the generic prompt in the second round at helping students recognize and express the relevant ideas they held.

Discussion

These early findings suggest that asking adaptive, knowledge integration-oriented questions in a computer-generated dialogue can encourage students to take steps toward integrated revision of their ideas. The dialogue helped students generate many ideas, drawn from prior experiences, to consider in solving the science dilemma. Once students added new ideas, they either distinguished among them or used a new idea to buttress or modify their initial ideas. Guiding students to reflect on the different ideas they hold initiates a process in which they raise scientific questions and consider new variables. This may position students to explore those variables further or seek answers in subsequent instruction.

- Linn, M.C., Gerard, L.F., & Donnelly-Hermosillo, D. (in press). Synergies between learning technologies and learning sciences: Promoting Equitable Secondary Science Education. Handbook of Research on Science Education III. Routledge Press. https://drive.google.com/file/d/1Zfx2CMSn37XusCzHsjgLCKfAQMob_iou/view?usp=sharing

- Wiley, K., Gerard, L., Bradford, A., & Linn, M.C. (in press). Empowering teachers and promoting equity in science. Handbook of Educational Psychology. Oxford Press. https://drive.google.com/file/d/187eZIBiNmatycNAVTUKd9YLdV3lSx3xK/view?usp=sharing

- Gerard, L., Bichler, S., Bradford, A., Linn, M.C., Steimel, K., & Riordan, B. (2022). Designing an Adaptive Dialogue for Promoting Science Understanding. In J. Oshima, T. Mochizuki, & Y. Hayashi (Eds.), General Proceedings of the 2nd Annual Meeting of the International Society of the Learning Sciences. Hiroshima, Japan: International Society of the Learning Sciences. https://www.dropbox.com/s/ws5sdcfi72aykj1/ICLS2022%20Proceedings.pdf?dl=0

- Bradford, A., Li, W., Gerard, L., Steimel, K., Riordan, B., Lim-Breitbart, J., & Linn, M.C. (2023). Applying idea detection in dialog designed to support integrated revision. Paper to be presented in symposium: AI-Augmented Assessment Tools to Support Instruction in STEM, at the 2023 American Educational Research Association (AERA) annual meeting, Chicago, IL.

- Gerard, L., Bradford, A., Steimel, K., Riordan, B., & Linn, M.C. (2023). Designing adaptive dialogs in inquiry learning environments to promote science learning. Paper to be presented in symposium: Automated Analysis of Students’ Science Explanations: Techniques, Issues, and Challenges, at the 2023 American Educational Research Association (AERA) annual meeting, Chicago, IL.

- Li, W., Bradford, A., & Gerard, L. (2023). Responding to students’ science ideas in a Natural Language Processing based Adaptive Dialogue. Paper to be presented at the 2023 American Educational Research Association (AERA) annual meeting, Chicago, IL.

- Linn, Marcia C. Enhancing Teacher Guidance with an Authoring and Customizing Environment (ACE) to Promote Student Knowledge Integration (KI). 20th Shanghai International Curriculum Forum. Shanghai, China (November 11, 2022). [by Zoom]

- Linn, Marcia C. Leveraging Digital Technologies to Promote Knowledge Integration and Strengthen Teaching and Learning. International Conference on Digital Transformation in K-12 Education, Shanghai, China (November 2022). [by Zoom]

- Linn, Marcia C. Adaptive scaffolding to promote science learning. Ludwig Maximilian University, Munich. (September 20, 2022)

- Linn, Marcia C. Honored as a Woman Who Leads by Scientific Adventures for Girls. Panel discussion. (November 8, 2022)

- Linn, Marcia C. AI-Based Assessments: Design, Validity, and Impact. Keynote, AI and Education Conference (NSF-funded). University of Georgia, Athens, Georgia. (May 27, 2022)

- 2022 NSF STEM for All Video Showcase (Facilitators’ Choice Award)

Related Resources

- CADRE Spotlights:

- Resources from AI CIRCLS:

- AI and Education Policy Reading List: The AI and Education Policy Reading List is an annotated compilation of websites, primers, reports, academic articles, and databases that were shared by Exchange members over the course of our sessions. The reading list focuses on the intersections of policy, AI, and education in global and national settings, and provides additional starting points for learning about AI privacy and AI resources for educators.

- Identification of Priority Areas in AI and Ed Policy: Through the discussions surrounding the Speaker Sessions, members identified four high-priority concerns in AI and education policy: transparency and disclosure, communication and user agency, AI literacy, and ethics and equity. Members then created a taxonomy of potential actions to address those concerns.

- Reading List: Machine Learning and Artificial Intelligence in Education–A Critical Perspective

- STELAR Publication: Artificial Intelligence and Learning: NSF ITEST Projects At-A-Glance

- The Office of Educational Technology (OET) Blog Posts and Listening Sessions on the Topic of Artificial Intelligence: The OET is working to develop policies and supports focused on the effective, safe, and fair use of AI-enabled educational technology.